After its initial development in the last quarter of the 19th century, Cantor’s theory of the infinite has grown to become an essential part of the now broadly-accepted standard version of set theory. But even in Cantor’s time, and since then into the present, various mathematicians and philosophers have rejected Cantor’s theory of the infinite as inherently flawed, on the ground that completed, that is, finitized, infinities cannot exist (e.g., the set of natural numbers ℕ = {1, 2, 3, 4, … } does not and cannot exist as a completed entity), and the terms finitism and constructivism – to the extent that these terms refer to coherent concepts – have been used to describe the philosophical position and mathematical practice which is based on the strong curtailing, and sometimes outright denial, of the logical legitimacy of what Cantor believed he had done, i.e., the finitizing of the infinite, though this curtailing and denial were more prominent a century ago. In fact, Cantor’s theory of the infinite is inherently flawed, and the flaw is based on a subtle confusion of concepts. At the heart of this confusion is the continuum hypothesis (CH), which, unlike much of standard set theory and broader mathematics, is not a statement that only indirectly and approximationally relies on the assumption of the existence of finitized infinity; rather, it depends directly and intrinsically on this assumption. As such, CH is directly affected by the flaw in Cantor’s theory of the infinite, and this difference shows itself in certain ways: for example, CH has remained unsolved for a more extended period of time than typical problems and questions in mathematics and set theory, and will remain so as long as the flaw in the foundations of the theory continues to be implicitly accepted as true. 
If a problem is foundational, rather than strictly mathematical, then every mathematical effort to resolve the problem will ultimately fail, because such effort will always be made in the context of the flawed foundation; and a foundation, being those most essential principles, patterns, and assumptions on which all other ideas and conclusions are based, is always the part of our thought, and our worldview, that we cling to most stringently, and is thus the part most difficult to recognize as flawed if it is flawed, and the most difficult to change, especially if nontrivial parts of that foundation are valid and lead to fruitful areas of research, correct conclusions about the natural world, and logically consistent and meaningful mathematical results.

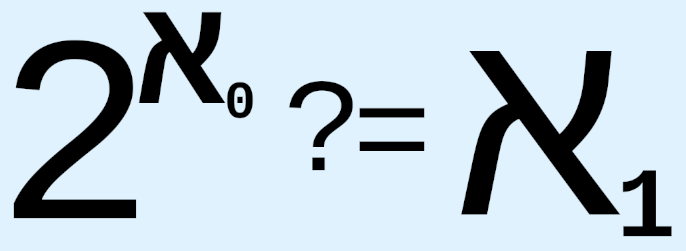

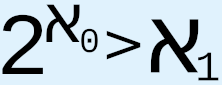

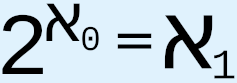

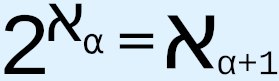

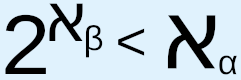

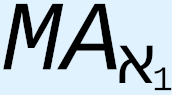

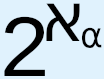

When Cantor proposed CH in 1878, he expended extraordinary effort to try to provide an answer, but, as we know, he failed. Since then, various results related to CH have been obtained, such as Gödel’s 1938-40 proof that CH cannot be disproved from the axioms of ZFC and Cohen’s 1963 proof that CH cannot be proved from the axioms of ZFC, which, respectively, are meant to prove that CH is both logically consistent with and independent of the axioms of ZFC. Other results include using the axiom of determinacy to show that  is not an aleph;1 that generalized CH entails the axiom of choice;2 that “the generalized continuum hypothesis is consistent with ZF ... and this result is stable under the addition of many large cardinal axioms”;3 that the axiom of constructibility implies generalized CH;4 the use of various large cardinal axioms, or, effectively, stronger axioms of infinity, that are tacked onto ZFC in order to propose the existence of larger and larger cardinals, such as “(in increasing order of size) strongly inaccessible, strongly Mahlo, measurable, Woodin, and supercompact cardinals,”5 in the hope that doing so will provide more information about the nature and structure of infinity, which we hope will in turn give us a greater chance of understanding and resolving CH and generalized CH; Woodin’s hypothesis known as the Star axiom, which would make

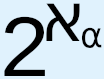

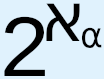

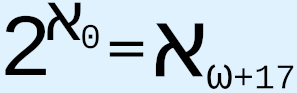

is not an aleph;1 that generalized CH entails the axiom of choice;2 that “the generalized continuum hypothesis is consistent with ZF ... and this result is stable under the addition of many large cardinal axioms”;3 that the axiom of constructibility implies generalized CH;4 the use of various large cardinal axioms, or, effectively, stronger axioms of infinity, that are tacked onto ZFC in order to propose the existence of larger and larger cardinals, such as “(in increasing order of size) strongly inaccessible, strongly Mahlo, measurable, Woodin, and supercompact cardinals,”5 in the hope that doing so will provide more information about the nature and structure of infinity, which we hope will in turn give us a greater chance of understanding and resolving CH and generalized CH; Woodin’s hypothesis known as the Star axiom, which would make  equal to

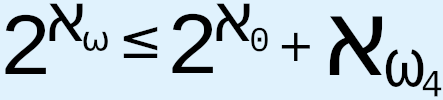

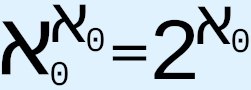

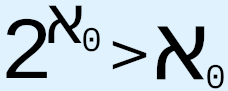

equal to  , invalidating CH, though “Woodin stated in the 2010s that he now instead believes CH to be true, based on his belief in his new ‘ultimate L’ conjecture”;6 the result of Saharon Shelah that “was able to reverse a trend of fifty years of independence results in cardinal arithmetic, by obtaining provable bounds on the exponential function. The most dramatic of these is

, invalidating CH, though “Woodin stated in the 2010s that he now instead believes CH to be true, based on his belief in his new ‘ultimate L’ conjecture”;6 the result of Saharon Shelah that “was able to reverse a trend of fifty years of independence results in cardinal arithmetic, by obtaining provable bounds on the exponential function. The most dramatic of these is  . Strictly speaking, this does not bear on the continuum hypothesis directly, since Shelah changed the question and also because the result is about bigger sets. But it is a remarkable result in the general direction of the continuum hypothesis”;7 the fact that Martin’s Axiom for

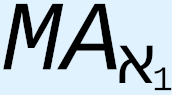

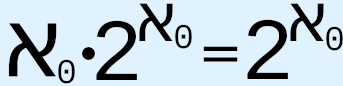

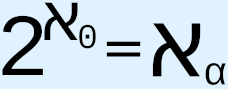

. Strictly speaking, this does not bear on the continuum hypothesis directly, since Shelah changed the question and also because the result is about bigger sets. But it is a remarkable result in the general direction of the continuum hypothesis”;7 the fact that Martin’s Axiom for  , i.e.,

, i.e.,  , "implies

, "implies  , the negation of the Continuum Hypothesis”;8 and many others. But results such as these either assume CH or generalized CH to be true in order to see what results follow from this assumption, or else take for granted that different levels of infinity exist and that infinite sets can be treated, a la Cantor, as finite, complete, conceptually concrete, mathematically manipulable entities. In the first case, if CH itself is a logically flawed statement, then one cannot prove the truth or falsity of CH by first assuming its truth or falsity, since no amount of logical deduction from this starting point will lead to valid logical conclusions that can be identified with valid logical conclusions from other areas of mathematics; also, since such comparisons, should we attempt to make them anyway, would depend on the validity of these other conclusions, they would be regressivist in nature and so subject to the potential uncertainties involved in regressivist arguments. In the second case, as we shall see, the assumption that infinity can be finitized is flawed, which means that mathematical investigations that are based on this assumption will always fail to resolve the continuum hypothesis.

, the negation of the Continuum Hypothesis”;8 and many others. But results such as these either assume CH or generalized CH to be true in order to see what results follow from this assumption, or else take for granted that different levels of infinity exist and that infinite sets can be treated, a la Cantor, as finite, complete, conceptually concrete, mathematically manipulable entities. In the first case, if CH itself is a logically flawed statement, then one cannot prove the truth or falsity of CH by first assuming its truth or falsity, since no amount of logical deduction from this starting point will lead to valid logical conclusions that can be identified with valid logical conclusions from other areas of mathematics; also, since such comparisons, should we attempt to make them anyway, would depend on the validity of these other conclusions, they would be regressivist in nature and so subject to the potential uncertainties involved in regressivist arguments. In the second case, as we shall see, the assumption that infinity can be finitized is flawed, which means that mathematical investigations that are based on this assumption will always fail to resolve the continuum hypothesis.
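For reference, the statements named in this paragraph can be written out as they are usually formulated in standard set theory. The display below is only a summary sketch in conventional textbook notation; the symbols (the power set 𝒫, the consistency predicate Con) are assumptions of that notation and are not taken from the sources quoted above.

```latex
\begin{align*}
\text{Cantor's theorem:}\quad & |X| < |\mathcal{P}(X)| \ \text{for every set } X,\\
\text{CH:}\quad & 2^{\aleph_0} = \aleph_1,\\
\text{Generalized CH:}\quad & 2^{\aleph_\alpha} = \aleph_{\alpha+1} \ \text{for every ordinal } \alpha,\\
\text{G\"odel (1938--40):}\quad & \mathrm{Con}(\mathrm{ZFC}) \Rightarrow \mathrm{Con}(\mathrm{ZFC} + \mathrm{CH}),\\
\text{Cohen (1963):}\quad & \mathrm{Con}(\mathrm{ZFC}) \Rightarrow \mathrm{Con}(\mathrm{ZFC} + \neg\mathrm{CH}).
\end{align*}
```

Every line of this summary already treats ℵ₀ and 𝒫(X) as completed objects, which is exactly the assumption the present paper calls into question.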

Other researchers take a different approach. For example, Solomon Feferman has argued that CH is not decidable, based on the idea that the concept of an arbitrary set of the reals – or any set with the same cardinality – is inherently vague and cannot be made more precise without violating the nature of an arbitrary set; and since the meaning of CH is tied to the concept of an arbitrary set of reals, CH is not a precise enough statement to have a truth value.9 Let us examine this argument more closely, as well as certain other things Feferman says in his writings on the subject. For example, Feferman states, “Levy and Solovay (1967) showed that CH is consistent with and independent of all such large cardinal assumptions, provided of course that they are consistent. So the assumption of even (Large) Large Cardinal Axioms (LLCAs) is not enough; something more will be required.”10 This is another indication, however indirect, that there is something different about CH when compared to many other problems in set theory. Feferman describes a certain perception among set theorists regarding CH: “There is no disputing that CH is a definite statement in the language of set theory, whether considered formally or informally. And there is no doubt that that language involves concepts that have become an established, robust part of mathematical practice. Moreover, many mathematicians have grappled with the problem and tried to solve it as a mathematical problem like any other. 
Given all that, how can we say that CH is not a definite mathematical problem?”11 He further states that “one can try to sidestep [difficult issues in trying to finitize infinity] by posing the question of CH within an axiomatic theory T of sets, but then one can only speak of CH as being a determinate question relative to T.”12 Notice the use of the phrases “in the language of set theory” and “relative to T,” which imply that CH can be thought of as a meaningful question within the context of a set theory that already assumes that it is possible to finitize the infinite, i.e., that it is meaningful to use and manipulate a symbol that represents infinity, such as ω or ℵ₀, in the same way that we would use and manipulate symbols that represent finite quantities. Feferman’s assessment is correct here, and more will be said about this later. Further, in discussing the modeling of large cardinals and the components of CH in second-order logic, Feferman states, “Clear as these results are as theorems within ZFC about 2nd order ZFC, they are evidently begging the question about the definiteness of the above conceptions required for the formulation of CH… . But in relying on so-called standard 2nd order logic for this purpose we are presuming the definiteness of the very kinds of notions whose definiteness is to be established.”13 (Italics mine.) Again, this is correct: arbitrarily assuming that a concept is clear and definite, and using it as if it is, does not make it so. Feferman further states, “But it is an idealization of our conceptions to speak of 2^ℕ, resp. S(ℕ) [the power set of ℕ], as being definite totalities. And when we step to S(S(ℕ)) there is a still further loss of clarity, but it is just the definiteness of that that is needed to make definite sense of CH.”14 (Italics mine.)
This is also correct, as Feferman points to the incorrectness of the idea that we can treat infinities as finite things, and at least implies that there is inherent, i.e., ineradicable, vagueness in the conception of multiple levels of infinity; and he also points to the fact that logical validity and clarity of concepts is needed in precisely these things if the statement of CH is to be meaningful, and therefore to have an objective truth value (and not just a truth value in the context of an arbitrary set-theoretical framework which already assumes that infinities can be treated as finite quantities and that there are multiple levels of infinity). Again, more on this later.

But then Feferman reverts to using familiar concepts from set theory and logic to propose (the beginning of) a solution. However, he breaks somewhat from the traditionalist set-theoretical assumption which says that all of set theory can be based on the concepts of classical logic, i.e., the system of logic in which the Law of the Excluded Middle (LEM) is assumed to be always applicable. In particular, he makes use of the ideas of intuitionistic logic, in which LEM is not taken as a universal given, to define “semi-intuitionistic” and then “semi-constructive”15 versions of set theory in which the truth of statements about quantification over definite or non-vague sets or collections is settled by classical logic, while the truth of statements that quantify over non-definite or inherently vague sets or collections, or perhaps uncountable collections, may possibly be settled by intuitionistic logic. He asks of the “statements of classical set theory that are of mathematical interest, one would like to know which of them make essential use of full non-constructive reasoning and which can already be established on semi-constructive grounds.”16 Finally, Feferman says that CH is a meaningful statement in the context of his modification of set theory that he calls SCS + (Pow(ω)), which is his semi-constructive set theory with the axiom of power set added but restricted to the power set operation only of the lowest level of infinity; and then he says that CH is a definite statement, which presumably means that it has a definite truth value in some sense, in the context of his SCS + (Pow), which is his semi-constructive set theory with the full axiom of power set added, i.e., the axiom of power set as applied to all levels of infinity. 
Then he states that the concept of the power set of ω is “clear enough,”17 but that the concept of an arbitrary subset of this power set is not clear, and that it is precisely this lack of conceptual clarity that makes CH an “essentially vague statement, which says something like: there is no way to sharpen it to a definite statement without essentially changing the meaning of the concepts involved in it.”18

Feferman sees the truth through a glass darkly. He understands that there is some sort of essential vagueness in the statement of CH, but he does not provide any real clarity on the nature and source of this vagueness. Feferman writes, “Presumably, CH is not definite in SCS + (Pow(ω)), and it would be interesting to see why that is so.”19 In other words, presumably his semi-constructive set theory with the power set operation restricted to the power set of the lowest level of infinity cannot contain a definite statement of CH about which we could draw some kind of definite conclusion, which possibly makes intuitive sense given that CH relates the lowest level of infinity to the next higher level of infinity, and perhaps just like first-order logic Feferman’s Pow(ω) operation does not encompass the next higher level of infinity in a way that makes it “useful” in making a definite statement about CH; however, there is a certain lack of clarity in Feferman’s statement, in that, given Cantor’s theorem that the cardinality of a set is always strictly less than the cardinality of its power set, and since the cardinality of the set ω is the cardinality of the infinite set of natural numbers, this means (in standard set theory) that the cardinality of Pow(ω) must be strictly greater than the cardinality of ω; and since Pow(ω) is included as a valid entity in Feferman’s SCS + Pow(ω) set theory, isn’t SCS + Pow(ω) itself already powerful enough to make at least CH “definite,” if not generalized CH? But this lack of clarity, as will be shown, is itself a symptom of an insufficient grasp of both the nature of infinity and the nature of the uncountable, and, in particular, of the nature of the supposed difference between countable and uncountable infinity as represented most memorably by Cantor’s famous diagonal argument.
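Since the diagonal argument is invoked here, a small sketch of its mechanical core may help fix ideas. This is only an illustration on a finite listing: the names `BinarySequence`, `diagonal_prefix`, and the four example sequences are hypothetical, and the code shows only the construction step (flip the k-th digit of the k-th sequence), not the set-theoretic conclusion that standard theory draws from it.

```python
from typing import Callable, List

# A binary sequence is modelled as a rule mapping an index n >= 0 to a digit 0 or 1.
BinarySequence = Callable[[int], int]


def diagonal_prefix(listing: List[BinarySequence]) -> List[int]:
    """Return the first len(listing) digits of the diagonal sequence.

    The k-th digit is the flipped k-th digit of the k-th listed sequence,
    so the result differs from every listed sequence in at least one place.
    """
    return [1 - seq(k) for k, seq in enumerate(listing)]


# Hypothetical example listing of four sequences.
listing: List[BinarySequence] = [
    lambda n: 0,                       # 0, 0, 0, 0, ...
    lambda n: 1,                       # 1, 1, 1, 1, ...
    lambda n: n % 2,                   # 0, 1, 0, 1, ...
    lambda n: 1 if n % 3 == 0 else 0,  # 1, 0, 0, 1, ...
]

d = diagonal_prefix(listing)

# The diagonal digits disagree with sequence k at position k, for every k listed.
assert all(d[k] != listing[k](k) for k in range(len(listing)))
```

Note that the sketch only ever handles a finite listing and a finite prefix; the step from this construction to a claim about a completed infinite listing is the step whose legitimacy is at issue in this paper.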

In his concluding statement that CH is inherently vague, Feferman says, “But to formulate that idea more precisely within the semi-constructive framework, some stronger notion than formal definiteness may be required.”20 This is correct, but likely not in the way Feferman thinks. Feferman states that he is interested in finding a more precise formulation of the vagueness of CH “within the semi-constructive framework,” and the implication is that by using intuitionistic logic in the right way, we may be able to formulate the notion of “vagueness” in a precise manner, i.e., in the form of mathematical symbolism, and with this precise expression of vagueness we may be able to settle CH in a satisfactory way, and, in particular, to prove that CH is inherently vague. But there is a problem here, and it is in the incorrect understanding of the value and usefulness of intuitionistic logic. Intuitionistic logic cannot “settle” the truth of any idea, i.e., it cannot draw definitive conclusions about something – if it could, it would be no different from classical logic. At best, intuitionistic logic can only be used to provide a formal mathematical or symbolic representation of our lack of knowledge about something. Assuming a statement is based on clear (i.e., not vague) concepts, then the more knowledge we gain about the subject matter of the statement, the closer we come to being able to determine definitively whether the statement is true or not, i.e., to determine its truth value classically. 
There are many statements, such as typical statements about the natural world in science, whose truth value may never be definitively determined in the sense of classical logic no matter how much we know about such a statement’s subject matter, and no matter how confident we are in such a statement’s truth (e.g., the statement “gravity is always an attractive force”), and in this sense we can say that we may never be able to determine the truth value of such a statement in the classical sense. In such a case, we may represent our lack of perfect knowledge using intuitionistic formalism, but doing so does not increase our knowledge beyond what we already had before we took recourse to the intuitionistic formalism. At best, it provides us with a false sense that we have gained knowledge in some way by representing our current lack of knowledge more concretely. This is the case in general when the methods of intuitionistic logic are applied to a statement, and in particular to CH. If CH is inherently vague, as Feferman claims, then perhaps we may be able to represent this vagueness “more concretely” by the methods of intuitionistic logic, but such concretization in no way gives us a clearer understanding of the nature and source of the vagueness; at best, it gives us a false sense of having gained knowledge about the statement being analyzed, because we have invested a nontrivial amount of thought to produce the result, and have manipulated symbols into a form they were not in before, all of which is certainly an achievement, just not the particular achievement we sought. 
This is not to mention the fact that Feferman’s system of set theory itself already includes at least Pow(ω) as part of its axiomatic framework, and so ω and Pow(ω), which are infinite quantities, are from the beginning being treated in certain ways by Feferman’s system as finite, mathematically manipulable quantities, which, as Feferman indirectly indicates when he says that the concepts underlying CH are inherently vague, is a starting point for flaws, blind spots, and self-imposed limitations on our thinking about and understanding of the subject matter. Given these considerations, though Feferman’s assessment that CH cannot be given a definite truth value is correct, his approach to finding the reason for this will ultimately fail no less than the approach of appending ever stronger axioms of infinity to ZFC in the hope that by doing so we will learn more about the “hierarchy of infinities” and be able thus to use this new knowledge to finally resolve CH. In both cases, though in different ways, the reasoning is based on the unsubstantiated assumption of the validity of the very concepts that make CH vague and that prevent it from having a definite truth value. Such proposals still fall back on the flawed but familiar conceptions of completed infinite sets and different levels of infinity, even in the context of the recognition that there is a flaw in the notion of completed infinity, as in Feferman’s case, and the reason we still fall back on these flawed but familiar conceptions is simply that in our effort to grasp infinity, and to finally resolve CH, we can think of nothing better to put in their place.21

In a later article,22 Feferman again makes certain statements that show he is on the right track, but also that he still does not see how the different pieces fit together. Specifically, he says, “Briefly, mathematics at bottom deals with ideal world pictures given by conceptions of structures of a rather basic kind. Even though features of those conceptions may not be clearly definite, one can act confidently and go quite far as if they are; the slogan is that a little bit goes a long way. Nevertheless, if a concept such as the totality of arbitrary subsets of any given infinite set is essentially indefinite, we may expect that there will be problems in whose formulation that concept is central and that are absolutely unsolvable. We may not be able to prove that CH is one such ... but all the evidence points to it as a prime candidate.”23 Feferman’s general statement regarding indefiniteness and unsolvable problems is correct, and, as with some of Feferman’s other commentary on the CH issue, it touches upon the core of the problem, yet again without providing any clarity on the source of the indefiniteness in the case of CH. He then goes on to outline his proposed logical framework based on intuitionistic logic by which we may possibly be able to formally prove that CH is indefinite, and thus cannot be assigned a truth value. The framework he outlines in this newer article is no different from that outlined in his older article. 
However, he does report a new result, specifically that “Rathjen (2014) established my conjecture using a quite novel combination of realizability and forcing techniques.”24 He then goes on to describe certain additional consistency and broader set-theoretic applicability results of Rathjen’s finding, and implies that this finding perhaps makes things more manageable by stating that whereas earlier results required ω1 or infinitely many Woodin cardinals, Feferman’s and Rathjen’s work shows that similar results can be obtained by the much smaller infinite value of Pow(ω). Feferman concludes with, “I do not claim that his work proves that CH is an indefinite mathematical problem, but simply take it as further evidence in support of that. Moreover, it shows that we can say some definite things about the concepts of definiteness and indefiniteness when relativized to suitable systems; these deserve further pursuit in their own right.”25 We must note here for emphasis that Feferman does not claim to have actually understood the source of the vagueness that is inherent in CH, only that certain results in the context of his SI version of set theory reinforce his belief that the inherent vagueness is real, by supporting the idea, via Rathjen’s 2014 work, that CH is not “definite” in the context of his version of set theory, i.e., that CH cannot be resolved in the context of his version of set theory in terms of classical logic, and, therefore, at best CH’s inherent vagueness can be formally stated in the context of his system’s implementation of intuitionistic logic. But as Feferman himself suggests, this does no more than provide an additional hint that his sense that CH is vague is correct, and does nothing to touch upon the underlying reason for the vagueness, which it is necessary to understand in order to actually resolve CH. As stated above, intuitionistic logic can do no more than provide a formal symbolic expression of our lack of knowledge about something.
As such, it cannot be used to directly prove the truth or falsity of any statement. In other words, for any system of logic, whether intuitionistic, classical, or otherwise, the only possible way to prove something is to draw logically valid deductions based on logically valid premises. But in the case that one is able to do this, one has no need for what intuitionistic logic offers, i.e., one is able to state one’s result definitively, in the context of classical logic, that is, in the context of a system of logic that treats LEM as a universal given. Only if one does not have a clear understanding of the subject matter might one think that intuitionistic logic could be useful, in order to perhaps “clarify” one’s lack of understanding. But the reality is that the idea of intuitionistic logic being able to definitively prove anything, including that a particular statement is inherently vague or indefinite, is a contradiction in terms; definitive proof precludes the very nature of and need for intuitionistic logic.

Feferman also makes a comparison between standard set theory and his semi-intuitionistic and semi-constructive versions of set theory (which he calls KPω, SI-KPω, and SC-KPω, respectively), and with regard to these he states, “The main result in [one of Feferman’s 2010 articles on the subject] is that all of these systems are of the same proof-theoretical strength… . Moreover, the same result holds when we add the power set axiom Pow as providing a definite operation on sets, or just its restricted form Pow(ω) asserting the existence of the power set of ω.”26 Okay. But note that this is a regressivist argument, not an argument for the independent, i.e., objective, validity of Feferman’s set-theoretic axiomatic constructions; furthermore, the truth of this argument depends on the validity of the axioms and framework of proof theory. Feferman’s result simply states that according to proof theory his SI and SC constructions of set theory and standard ZFC are equiconsistent. In other words, this result is an indication that Feferman’s constructions of set theory accurately mirror standard set theory in essential ways, so that results obtained in Feferman’s constructions, at least in regard to those things which standard set theory covers, may perhaps be treated as valid in standard set theory as well. Such an equivalence should not be construed as providing any reason to believe that Feferman’s constructions of set theory are more true, more accurate, or, in particular, more capable of solving CH, than any other known variant of set theory; at best, we may say that this result shows that nothing in Feferman’s constructions of set theory will explicitly contradict any of the statements of standard set theory.

As stated at the beginning, there is a confusion of concepts that is at the center of why CH is so difficult to resolve. As such, if we take some time to clarify these concepts, we can understand the source of the confusion and provide a satisfactory resolution to CH and generalized CH. It should be noted at this point that certain of the concepts and descriptions to follow will be unorthodox from a traditional mathematical point of view. It should also be noted that certain of the concepts and descriptions to follow may initially seem to be within a certain philosophical tradition, such as, in particular, constructivism or finitism, but the reader, especially one whose philosophical inclinations lean in an opposing direction, is asked to be patient and allow the explanation to proceed to the end without resort to any prior understanding of the often confusing and contradictory natures of the variants of a particular philosophical tradition which this paper may seem to be espousing. For a problem such as CH, which has been resiliently opaque and intractable for such a long time, it should be expected that it will be necessary to resort to certain unorthodox ideas, and certain reinterpretations of familiar ideas, in order to find a resolution. That being said, the argument to follow does not employ any complicated or extensive set-theoretic or mathematical formalism; as a result, it is relatively easy to follow, even for those not schooled in set theory or advanced mathematics.27 And this, too, is expected. When clarity is obtained on a subject, it becomes much easier to understand its essential nature (this itself is what it means to obtain clarity about something), and it is also easier to see when an argument on the subject is off base, or based on flawed assumptions, or will not achieve the desired end. As a result, explanation can be simplified.

We will begin with a basic discussion about the nature of infinity. Infinity is the quality of being not finite, i.e., it is the opposite of “finite,” and this understanding is implicit in important ways in much of mathematical thought, though in other ways it is not. As such, we may understand that if we take a sequence of numbers defined by an easily recognizable pattern, such as the sequence 1, 2, 3, 4, … which is produced by what in set theory is called the successor function, and stop this iteration at any point, we are left with a finite, i.e., a non-infinite, collection of numbers, no matter how large. Such a finite collection of numbers is a finished thing, and it is thus possible to conceive, without logical contradiction, that a single entity, which we may call a set (or otherwise a collection, group, class, or any other similar term), explicitly contains every single one of these items, and, further, that since the number of items is finite, the set itself is finite in extent as well, i.e., it is bounded or circumscribable in a meaningful sense of these terms. Note that “extent” does not necessarily mean spatial extent; in the case of this example it means conceptual extent, though the two are inherently related.28 However, the same argument can be made for such a collection of items or objects in physical space – say, billiard balls: no matter how many billiard balls are added to our collection of billiard balls (assume they are added one at a time, though this is not strictly necessary), if we stop at any point, we have a finite collection of billiard balls taking up a finite amount of space, i.e., having a finite extent in space, no matter how large. As such, it is not a logical contradiction to say that such a collection is fully bounded or circumscribable.
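The point about stopping the iteration can be made concrete with a short sketch. The names `successor`, `iterate_successor`, and `stop_after` are illustrative assumptions, not part of any set-theoretic formalism: the generator is a rule for producing the next number, and only when we stop it at some point do we obtain an actual, finite collection.

```python
from itertools import islice
from typing import Iterator, List


def successor(n: int) -> int:
    """The successor step: from n, produce n + 1."""
    return n + 1


def iterate_successor(start: int = 1) -> Iterator[int]:
    """Yield start, start + 1, start + 2, ... one value at a time.

    This is a rule for continuing, not a finished list: no collection
    of "all" the values is ever built.
    """
    n = start
    while True:
        yield n
        n = successor(n)


def stop_after(steps: int) -> List[int]:
    """Stop the iteration after `steps` values and collect what was produced."""
    return list(islice(iterate_successor(), steps))


first_five = stop_after(5)
many = stop_after(1_000_000)

# Wherever we stop, the result is a finite, fully bounded collection.
assert first_five == [1, 2, 3, 4, 5]
assert len(many) == 1_000_000
```

However large the argument passed to `stop_after`, the returned list has a definite length and a last element; only the rule `iterate_successor` itself has no stopping point.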

However, this is not the case with the infinite. In fact, it cannot be the case, because by definition “infinite” means “not finite” – any other definition of this term would make it devoid of meaning. Therefore, for a “set” or “collection” to be infinite one would have to not stop at any point in the addition of further items or objects, whether in conceptual space or in physical space.29 This means that an infinite “set” would have the characteristic of being not bounded and not circumscribable in any meaningful sense of these terms. It is therefore a logical contradiction, and therefore an impossibility, to have a finite object, such as a set or collection that we treat in our thought and mathematical manipulations as a bounded or circumscribable or complete entity which can, for example, have relations of various kinds with other such entities and can itself be a member of other such entities, be an infinite quantity. At most we may represent an infinite quantity by a finite symbol, such as ℕ to represent the infinite quantity of the natural numbers, or call this never-ending collection of elements a “set” or an “infinite set,” in order to make our thought about these collections and our mathematical manipulations in certain ways more convenient. There is no logical contradiction in a representation. The problems begin when we start treating this practical conceptual tool as if it were a concrete reality (either conceptually or physically); in doing so, we stretch this practical tool beyond the bounds of its applicability. When this is done, subsequent results will have logical flaws in them, and questions and statements of problems that are made on the basis of this concretization will have the remarkable ability to resist all efforts at solution decade after decade and remain, essentially, always just as opaque and intractable as when they were first articulated. 
It is this subconscious stretching of a practical conceptual tool beyond the bounds of its applicability that, gradually over time, allows us to become comfortable with the idea that an infinite quantity can actually be a finite, manipulable, bounded, circumscribable thing, that, in other words, there is no contradiction in such a conception, or else helps us develop a mental framework within which we can, for all practical purposes, forget that there is a contradiction, or treat the contradiction as insignificant.30 Part of understanding why CH has not yet been solved in all this time is to backtrack on this concretization in order to return to an accurate understanding of the limitations that should be placed on the use of finite symbols, such as ℕ or ℵ₀, to represent infinite quantities. It will also be of benefit to come to a better understanding of why there is such a strong desire to make these concretization efforts in the first place, which understanding will make us better able in the future to prevent such concretization from happening, or to recognize sooner when it is happening.31

Let us now take a moment to reexamine, in light of the preceding discussion, certain key aspects of the items in standard set theory known as cardinals and ordinals. We will also discuss here the concepts of transfinite induction and transfinite recursion. First, we understand that in standard set theory, “Every natural number is an ordinal.”32 We also understand that in standard set theory, “Finite sets can be defined as those sets whose size is a natural number… . By our definition, cardinal numbers of finite sets are the natural numbers.”33 So the definitions of ordinal number and cardinal number include the natural numbers. The difference between the two is that ordinal numbers are the numbers themselves as entities or objects (typically envisioned as being written in order from smaller to larger), and cardinal numbers are the magnitude or size of each ordinal number. For the natural numbers, i.e., the finite quantities, the ordinal and its corresponding cardinal always match, e.g., the ordinal 2 has magnitude, or size, 2; this is, however, not always the case with ordinals and cardinals that are infinite. In set theory, it is common practice to “extend” the sequence of natural numbers into what is called the transfinite, i.e., beyond the finite. It is imagined that we may use a symbol to represent the ordinal number that is one unit beyond the “end” of the natural numbers, and in standard set theory the symbol typically used for this is ω. At first this may seem counterintuitive, but one may accustom oneself to the idea by analogy with how each finite number is merely one unit beyond the end of the sequence of all smaller finite numbers. Also, the idea of an “end” of the natural numbers can be made more palatable by equating ℕ to ω, i.e., defining ω = ℕ, since ℕ is another symbol used to represent the natural numbers as a whole, i.e., we are already accustomed to the idea that ℕ represents an infinite quantity. 
But once we have grown accustomed to this idea, it is not so great a leap to the idea that we may “extend” the arithmetic operation of addition by one, i.e., of the successor function, which is a well-defined notion in the context of finite numbers, into the transfinite, and thus that it is meaningful to define the quantity ω+1; and once we are at this point, it is easy to see how the same algorithm may be applied again, since 1 is a finite number, and it is well understood how to increase a finite number by one. We therefore must do very little at this point conceptually to convince ourselves that it is meaningful also to define the quantities ω+2, ω+3, ω+4, etc. But this is now clearly a pattern, and since the first ω is defined as the number right after the “final” number of the natural number sequence, we convince ourselves that it is meaningful to take the second term in these transfinite expressions all the way to ω as well and define the transfinite “number” ω+ω, which is also represented as ω⋅2, i.e., the ordinal product of ω and 2.34 In this way, we can then define subsequent expressions, such as $\omega\cdot 3,\ \omega\cdot 4,\ \ldots,\ \omega\cdot\omega = \omega^{2},\ \omega^{2}+1,\ \omega^{2}+2,\ \ldots,\ \omega^{\omega},\ \ldots,\ \omega^{\omega+1},\ \ldots,\ \omega^{\omega^{2}},\ \ldots,\ \omega^{\omega^{\omega}},\ \ldots,\ \omega^{\omega^{\omega^{\omega}}},\ \ldots$ .35 But we can go beyond this. We can say that the number after the very “end” of this infinite sequence of transfinite ordinals can be represented by another letter, say ɛ, and then proceed down the same road with ɛ, creating ɛ+1, ɛ+2, etc.36 It is clear that this process can never end, because it is always possible to take the very “end” of whatever sequence of transfinite numbers we are looking at (in set theory this would be called the sequence’s supremum), arbitrarily denote the number “after” this by yet another finite symbol, and start the pattern of increase all over again from that yet “higher” point. We don’t even have to use symbols that are in any of the known alphabets. 
We could use a glibbor, a letter in a made-up alphabet that no human civilization has ever used and whose symbolic form is a character unfamiliar to anyone in history. We could keep making up new symbols and alphabets after we had exhausted all the known ones, and continue this process until we are blue in the face and our chairs and our desks have completely fused with our bodies, and we still would not have reached, nor could we ever reach, an actual end. The process of evaluating these expressions is known as ordinal arithmetic, which is widely accepted as a valid practice in standard set theory. Nonetheless, the process of ordinal arithmetic is a prime example of what can be called pathological behavior, if we modify the definition a little to say that “pathological” means “an outgrowth, or a symptom, of one or more flawed premises,” rather than what it sometimes means, viz., “a counterintuitive mathematical result that is a symptom of a certain amount of imprecision in one’s founding definitions of one or more terms.” What has caused this pathological behavior is the initial assumption that it is possible, and not logically contradictory, for there to be an “end” to the sequence of natural numbers. Once we deny that there is a logical contradiction here, we may begin to treat this infinity, which here we call ω, as if it is a finite number, which can then be the subject of arithmetic operations. But this is logically impossible, because the sequence of natural numbers is infinite, i.e., not finite, and so there is, and can be, no end to the sequence, no “greatest” or “last” number in the sequence. This then means that there can be no number “beyond the end” of the sequence, and, in particular, that the term ω as used in standard set theory, as well as any arithmetic expression using it or any other symbol that is meant to be equivalent to a completed infinity, is meaningless.
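For reference, the standard textbook definition of ordinal addition, which the preceding paragraph describes informally, proceeds by recursion on the second argument. The notation here ($S$ for the successor operation, $\lambda$ for a limit ordinal, $\sup$ for supremum) is the conventional one and is an assumption of this sketch, not notation introduced above.

$$
\alpha + 0 = \alpha, \qquad \alpha + S(\beta) = S(\alpha + \beta), \qquad \alpha + \lambda = \sup\{\alpha + \beta : \beta < \lambda\}.
$$

The first two clauses are the familiar finite pattern. The third clause is the one that yields, for example, $\omega + \omega = \sup\{\omega + n : n < \omega\}$, and it is this clause, which takes the supremum of an unending sequence as a completed object, at which the objection raised here is directed.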

In other words, the ultimate goal of representing infinity by a sequential progression of transfinite ordinals, that is, the goal of conquering, or circumscribing, the infinite so we can feel that we have a grasp or handle on it, can never be achieved by this means. This is a sign of the connection of infinity to the concept of pattern, by which is meant a finite, and therefore fixed in nature, process that can be repeated as often as we like, i.e., that we can circle or loop back to the beginning of and perform again as often as we like. In the case of the natural numbers in canonical order, this process, this pattern, is known in set theory as the successor function. Once the pattern, or finite, repetitive algorithm, of the successor function is understood, we simultaneously understand that the application of it can never have a definite or complete end. In carrying the operation of successor addition over from the finite numbers to the transfinite numbers, we have really done nothing but continued, in a disguised way, the pattern of the successor operation that was well-defined among the finite numbers, while at the same time believing that we have added nontrivially to the store of knowledge about number and quantity. But if we strip from the operations of transfinite arithmetic the flawed assumption that an infinite sequence can have an end, and any implications of this assumption, and make no other changes to our process, we are left with nothing but the natural numbers themselves, and their arithmetic properties and expressions.

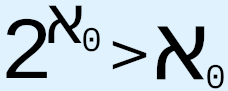

The same can be said for transfinite cardinal numbers. The symbol ℵ₀ is typically used to represent the cardinality of ω, i.e., the “number of elements” in the set of natural numbers considered as a whole, i.e., as a completed entity. In fact, ℵ₀ is the cardinal number for every one of the ordinal numbers in the long list of ordinals from two paragraphs ago, which means that every one of these incredibly large transfinite ordinals has, according to this scheme, the same number of elements, and furthermore, these elements are countable, i.e., they all have the same number of elements as the set of natural numbers. This itself is an example of pathological behavior, as defined above. The ordinal ω is then called the initial ordinal of all these other ordinals, because it is the first ordinal in the infinite sequence of transfinite ordinals that all have ℵ₀ for their cardinality, and as such ω is sometimes called ω₀ instead. But this itself is a contradiction, because ω is supposed to be the ending point of the natural numbers, meaning there are no more natural numbers after this point which can be used to index or identify the “place” in the linear sequence of any further ordinals; and yet each of these incredibly large ordinal numbers can, as a set, still be entirely enumerated by the natural numbers, even after all of the natural numbers themselves, the entire set of finite ordinals, have been completely exhausted in a partial enumeration. Then, by defining what are called Hartogs numbers, we can postulate ordinals that are beyond even the infinite sequence of countable ordinals with ℵ₀ for their cardinality, i.e., ordinals with a cardinality greater than ℵ₀ and thus whose set representations have more elements than the total number of natural numbers. But since the cardinality of these sets is greater than ℵ₀, we must use a different symbol to represent their cardinality. For this, we use ℵ₁, which is known as the first uncountable cardinal, and the corresponding initial ordinal is called ω₁. We will come back to the difference between countable and uncountable, and why, in fact, there is no such distinction, during subsequent discussion of certain topics, including the important topics of the real number line and Cantor’s diagonal argument. But for now, we may notice that with these definitions and symbol manipulations we have started yet another pattern, this time in the sequence of uncountable cardinalities and initial ordinals. As such, it can be expected that it will be possible to find a way to show that this sequence also can never end, which is what has been done in set theory: based on the definitions of Hartogs number and initial ordinal, as well as, crucially, on the extension of these definitions into the uncountable using the logically flawed concept of transfinite recursion37 (see below), we may conclude that “the Hartogs number of [a set] A exists for all A.”38 In other words, not only can we define ω₁ as the first uncountable ordinal, but we can also now define $\omega_2, \omega_3, \omega_4, \ldots, \omega_\omega, \ldots$ – that is, an infinite, i.e., unending, sequence of initial ordinals, each with a higher cardinality than the previous one. This also means that we may define an infinite sequence of uncountable cardinals, each with a “greater” level of uncountability than the preceding one. But it doesn’t end there. We can then define what is called a limit ordinal, in which we envision that this infinite sequence of ever-increasing uncountable ordinals approaches a limiting point, in the manner of a limit in calculus, where the infinite sequence finally, at “infinity,” converges to the limit ordinal that has an infinitely higher uncountable cardinality than any of the initial ordinals in the infinite sequence that led up to it. But even this does not end the sequence. 
At greater levels still, there are such concepts as strong limit cardinal, inaccessible and strongly inaccessible cardinal, and various other types of so-called “large cardinals.”

Notice that no matter where we stop, no matter how “large” we make our next uncountable cardinal (or ordinal), our implicit assumption is that even though the symbol for the transfinite number represents an infinite quantity, we are still justified in treating it as a finite, fixed, unitary, circumscribed, completed, mathematically manipulable object. And from such treatment it can be immediately perceived that there could be something still larger. This is a one-track kind of mindset, and it is a mindset that is (ironically) limiting, because in thinking that we can come to an understanding of the ultimate nature of infinity by continuing this process of finitizing the infinite, we make it that much harder to recognize and acknowledge in a nontrivial way the logical flaw on which this entire progression is based. In a complex set of interconnected ideas, such as that embodied by set theory and its interconnections with other branches of mathematics, many ideas are likely to be valid and based on sound assumptions and axioms, and in the case of infinity and how the concept is treated in set theory, not all is unsound. However, as we have been discussing, certain key parts of it are unsound, and have been from the moment the idea of the transfinite was introduced by Cantor. It has been said that in continuing the path of searching for ever “higher” levels of infinity, we are operating in the context of a “hierarchy postulating the existence of larger and larger cardinals” which provide “better and better approximations to the ultimate truth about the universe of sets,” and that ZFC is “just one of the stages”39 of this hierarchy. It is not impossible that one of the results of this effort will be a legitimately clearer understanding of the nature of sets and the nature of infinity. 
But in order for a clearer understanding to actually result from this effort, one of the steps along the way must be the recognition of the logical error of treating infinite quantities as if they were finite. The use of large cardinal axioms, ordinal arithmetic, cardinal arithmetic, and related concepts, in the context of a broader set of logically valid ideas, to uncover the truth about and connections between various mathematical concepts and constructs, may help mathematical investigative work in certain ways, but the more we rely on such flawed concepts, i.e., the deeper we dig ourselves in, the further we remove ourselves from the reality we are trying to describe and understand, and the more the mathematical symbols we manipulate become nothing more than symbols.

Before moving to the next topic, let us briefly discuss transfinite induction and transfinite recursion. Mathematical induction is a method of proof for items that can be placed in a sequence patterned in some way by the natural numbers, whereby we first prove that a statement about the first item in the sequence is true, then prove that if the statement is true about the nth item it must also be true about the (n+1)th item, and from these two results we conclude that the statement is true about all items in the sequence. On the other hand, recursion is the process of performing an operation on an initial input and then taking the output of this operation and making it the next input to the same operation; each time the operation (or function) takes an input, an index sequence is increased by one to keep track of the number of times this single operation has been applied. Now, both operations, induction and recursion, are well-defined when the nth item in their respective sequences is a finite number, i.e., a natural number. But it should be plain from the above discussion regarding the transfinite that when we try to take, for example, the ωth item, or operation, in the sequence, or the (ω+1)th item or operation, etc., we are not doing or saying anything meaningful. The only reason that the process of “extending” the operations of induction and recursion into the transfinite seems meaningful is that we have already accepted the dubious validity of the finitizing of the infinite that began when we assumed that there could be an end to the infinite sequence of natural numbers, and that the “number” just beyond this point could be represented by a finite symbol that can be manipulated meaningfully by arithmetic operations. Once we have done this, it is no great leap to the idea that it is valid also to extend the operations of induction and recursion into the transfinite. 
But as we have seen, the entire concept of such an extension is based on a logical error, and is thus fundamentally flawed. We may be able to deduce “interesting” mathematical results on the basis of the assumption that there is no logical error in finitizing the infinite, and, in fact, this is what has happened in set theory, with such results forming a significant part of set theory. But these results do not, and cannot, apply to reality, precisely because they are based on a logically flawed assumption, while reality is, and always must be, logically self-consistent.
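The finite case of recursion described above, in which each output becomes the next input while an index counts the applications, can be sketched as follows. The names `iterate` and `double` are hypothetical and serve only this illustration.

```python
def iterate(op, initial, steps):
    """Apply op repeatedly, feeding each output back in as the next input.

    Returns a list of (index, value) pairs, where index counts how many
    times op has been applied so far.
    """
    value = initial
    history = [(0, value)]
    for n in range(1, steps + 1):
        value = op(value)           # the previous output is the new input
        history.append((n, value))  # the index advances by one each time
    return history

def double(x):
    return 2 * x

print(iterate(double, 1, 5))
# [(0, 1), (1, 2), (2, 4), (3, 8), (4, 16), (5, 32)]
```

Every index in the returned list is a natural number, and the statement "the value at index n equals 2 to the power n" can be checked for any finite number of steps. The loop has no step at which the index is anything other than a finite number.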

As in the previous section, we will see here that the, typically subconscious, foundational assumptions that have made CH such an intractable problem are based partly on logical flaws and partly on imprecision in the definition of terms. We will begin our discussion with the topic of the real numbers and the real number line.

Real numbers are envisioned as being points on a straight line in order, from left to right, of increasing magnitude, such that every possible magnitude or fraction of a magnitude is represented by a point on this line, i.e., by a real number. A point on this line, i.e., the position of a single real number on this line, is approximated by a single dot that may be made by the tip of a pencil, though an actual point on the real number line is solely a position and has no breadth, width, or length, unlike a point that may be made by the tip of a pencil. As such, a point on the real number line cannot be said to exist in the physical sense. However, even in the conceptual arena in which a point on the real line is understood to be nothing more than a position, at a certain important level it cannot be said to exist in this sense either, and this is the beginning of a clarified understanding. Start by imagining the closed unit interval [0, 1]. If we arbitrarily assign each unit interval on the real number line the Euclidean distance of 1 in., then we may say that this unit interval has a 1 in. magnitude (i.e., length) in Euclidean space (or, equivalently, that its Lebesgue measure40 is 1 in.). If we divide this unit interval into two parts by a perpendicular line at a fixed point, and then take one of these two parts and divide it, and keep this process going, after a certain number of divisions the practical tools that we may be using to make these divisions (if we are using tools of some kind to actually divide a line that we have drawn) will reach the limits of their applicability and will no longer allow us to make further divisions; but conceptually we may imagine ever-increasing degrees of magnification on smaller and smaller stretches of the real line so that we can keep making these divisions for as long as we like. 
Imagine that after each division, the length of the stretch of line that we choose for our subsequent division is less than half the length of the line segment we just divided. This process, as stated, can never end, precisely because there is no non-zero component of length that is the smallest possible length that one can reach; and the reason for this is that the definition of length implicitly includes the concept of infinity, such that, as with the natural numbers and their infinite increase, no matter how many times we choose to divide the real line, we will always be able to divide it further using the same pattern, the same function, operation, process, etc., as we have been using. Another way of stating this is that because every position at which we divide the real line is a position only and has no extension, however small, in any direction, a linear accumulation of such points, no matter how many of them we accumulate, will never become even the tiniest fraction of a non-zero length accumulation, i.e., of a line or line segment. We may imagine accumulating an infinite number of these points (which, as discussed above, can never actually be done since “infinite” means “without end” or “without bound”) and trying to place them next to each other to create a line, but even “after” such an infinite accumulation, there will still be literally nothing, since a point, which is of zero extent in any spatial direction, cannot add any length to anything, and in particular cannot add any length to another point. But then, there is still the seemingly intuitively valid idea that if enough real numbers are accumulated linearly they will create a non-zero, positive Euclidean length measure, so that, for example, if we draw a straight line that is supposed to be a continuous linear accumulation of real number points of 1 inch in length on a piece of paper, it can be measured against a ruler and found to be precisely 1 in. There is something incongruous about this.
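The division process can be followed with exact arithmetic. The sketch below is a minimal illustration only: the function name `divide_repeatedly` and the fixed ratio of 1/3 are assumptions made for the example, and any ratio strictly below 1/2 would satisfy the "less than half" condition equally well.

```python
from fractions import Fraction

def divide_repeatedly(steps, ratio=Fraction(1, 3)):
    """Return the exact lengths of the segments chosen at each division.

    Starts from the unit interval (length 1). Each chosen segment is
    `ratio` times the previous one, with ratio strictly below 1/2.
    """
    if not (0 < ratio < Fraction(1, 2)):
        raise ValueError("ratio must be strictly between 0 and 1/2")
    lengths = [Fraction(1)]
    for _ in range(steps):
        lengths.append(lengths[-1] * ratio)
    return lengths

lengths = divide_repeatedly(10)
print(lengths[-1])                  # 1/59049
print(all(x > 0 for x in lengths))  # True
```

After any finite number of divisions the remaining length is an exact positive fraction, so a further division is always available. No step of the loop produces a length of zero.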

The problem of understanding the continuum, i.e., the set of real numbers, typically thought of as being arranged in continuous linear fashion, has been a celebrated problem in set theory and analysis for over a century, beyond just the CH question. This in itself indicates just how difficult it is to pin down precisely what we mean by the real numbers and the real number line. There have been numerous definitions of real numbers, such as the familiar decimal representation, that based on Cauchy sequences, that based on the concept of a Dedekind cut, or the concept of a complete ordered field. Each of these definitions attempts to come to terms with the fact that though we envision the reals as being placed next to each other to make up a line (of infinite length), each number itself is only a position on that line and has no length of its own with which to extend the line. Nonetheless, each fixed, unchanging “point” on the line is defined by a single real number, and the real number is thought of as being synonymous with that fixed point, i.e., with a fixed and unique quantity or magnitude if we have imposed a Euclidean distance metric onto the line. In the case of a number whose decimal representation is finite, it is easy to see, in finite terms, how this number fits on the real line – for example, the fraction ½ has a decimal representation of 0.5, and it is no difficult task to see how this value of 0.5, as a finished, fixed quantity, can be marked on the real number line. But what about the fraction ⅓? This is, of course, a rational number, since it is a ratio of integers. But its decimal expansion, though it does repeat, never ends: 0.33333… . Is there any significance to this difference between these two rational numbers? In fact, there is. It is that the position on the real line of the number ⅓ when this number is represented by its decimal expansion cannot be determined in a finite way. 
Certain definitions of the reals express this by using the concept of a limit to define such real numbers, though this is typically done only for the irrational numbers, i.e., numbers whose decimal representations neither repeat nor terminate and thus which cannot be written as ratios of integers. And this is the key difference. The decimal expansions which are finite, i.e., terminating, are equivalent to numbers, i.e., finite, fixed quantities on the real number line; however, the decimal expansions which are not finite are not equivalent to numbers, because they are themselves not fixed points on the line; rather, each is an always-moving sequence of points that gets closer and closer to a fixed limit point as more and more numbers are added to the end of its decimal expansion, with the decimal expansion never actually reaching the fixed limit point. In fact, it is the limit point itself that is the number, not the decimal expansion, which can only ever be an approximation of the number. Here we must recall the definition of infinity given above, viz., the quality of increasing without bound. A number is a definite point on the real number line; that is, it is finite. A non-terminating decimal expansion is, as its name states, non-terminating, and thus contains within it an infinite sequence of numbers, and with each number added to the end of this sequence the approximation to the limit point being approached by this sequence changes position on the real line. The essential conclusion here is that because the sequence is infinite, it never stops changing position on the real line – no matter how many digits are added to the end of the expanding decimal sequence, an infinite number of digits can be added beyond them. 
In other words, if a number is defined to be a fixed point on the real number line (and it makes no sense to say that a number on the line is anything other than a fixed point), then since an infinite decimal expansion that leads up to, but never reaches, such a fixed point is itself not a fixed point on the real line and never can be, it is not appropriate to call such a sequence a number at all. That is, finite, fixed quantities such as 300, -186.8979, -19, 0.33, 1, 1.5, 2, 2.4, 2.9, 3, 3.141, 4, 5.099, etc., on the one hand, and infinite decimal expansions, such as -9.44444…, 0.33323332…, 0.25252525…, 2.71828182845904…, 3.141592653…, on the other, are not the same type of mathematical object. To be clear, the limiting values of the infinite decimal expansions are themselves finite quantities, since it is a well-defined conception that does not run afoul of logical errors to conclude that each of these limiting values has a single, fixed position on an imaginary straight line that has an arbitrarily marked reference point, and as such it is appropriate to call the limiting values numbers. But it must be emphasized that the infinite decimal expansions themselves are not equivalent to the limiting values, and never can be, because, as stated, infinity means to never end, and thus the decimal expansions themselves can never reach the limiting values.
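The relation between the fraction ⅓ and the finite truncations of its decimal expansion can be computed exactly. The following sketch uses Python's `fractions` module; the names `truncation` and `gap` are hypothetical and are introduced only for this illustration.

```python
from fractions import Fraction

def truncation(n):
    """Exact value of 0.33...3 with n digits after the decimal point."""
    return Fraction(10**n - 1, 3 * 10**n)

def gap(n):
    """Exact difference between 1/3 and its n-digit truncation."""
    return Fraction(1, 3) - truncation(n)

for n in (1, 2, 5):
    print(n, truncation(n), gap(n))
```

For every finite `n` the gap works out to exactly 1/(3·10ⁿ): it shrinks by a factor of ten with each added digit, yet it is a positive fraction at every step. The fixed quantity ⅓ appears in the code only as the ratio `Fraction(1, 3)`, never as a digit string.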

But this analysis is missing something. If, as stated above, no amount of points could ever produce any length beyond zero, then how is it possible to say that there could be a continuous, i.e., without gaps, linear sequence of these points such that we can mark off varying positions of Euclidean distance on this linear sequence that correspond to different lengths or numbers? And here we get to the crux of the matter. There is a psychological conflation of these two concepts, the concept of Euclidean distance and the concept of an infinite linear sequence of points of zero length that can somehow create positive length in their linear aggregate and can thus be made to correspond to the familiar notion of Euclidean distance. But the reality is that, as stated above, no amount of points of zero length could ever be made to aggregate linearly to anything more than zero length. It is a logical error to believe otherwise. In our conflation of these two concepts in, e.g., calculus and differential equations, or even in basic algebra when, e.g., we are graphing a polynomial in the Cartesian plane, we are able to gloss over and effectively ignore this logical error, and thus treat these two concepts as equivalent, because these branches of mathematics do not need to be precise enough for this logical error to become relevant; they can, in other words, do all that they need to do based on the high degree of similarity in certain relevant respects between the concepts of linear Euclidean distance and continuous geometric lines, on the one hand, and the, as we must emphasize, logically imprecise, concept of the “real number line,” on the other, without having to take account of the fact that at their core these two things are not equivalent, and never can be. We may say, for example, that the irrational number π is clearly defined as the ratio of the circumference of a circle to its diameter. So how is this not a number? 
But this question confuses a fixed Euclidean length or distance with an infinite, ever-changing sequence of numbers. The ratio itself is finite and fixed, and thus equates to a single well-defined magnitude, but the decimal representation of this number will never end, i.e., it will never reach the equivalent of the magnitude of the ratio’s Euclidean distance. We believe we are using the symbol π to represent one thing, but this finite symbol really represents two distinct things, a finite, and thus fixed, Euclidean distance and an infinite sequence of digits, that have been conflated and are treated as if they were the same thing. These two things are similar enough out to the appropriate finite number of decimal places that we can make accurate approximate calculations based on the formula C=πd with our scientific tools, and thus similar enough that we can simply gloss over the fact that one of the things that the π symbol in the formula C=πd represents is an infinite, and thus always changing, sequence of digits. Therefore, we can also gloss over the fact that it makes no logical sense to try to multiply this infinite sequence by the fixed, finite diameter of a circle in order to obtain the circle’s fixed, finite circumference, since for the concept “multiply” to have any meaning the numbers being multiplied must both be fixed quantities, i.e., they must be represented numerically in their finite form, if they have one – in the case of rationals, as fractions, or alternatively as decimals if their decimal expansions terminate.41 This goes for the values of C and d as well – if these are non-terminating in their decimal expansions, then when we make concrete calculations with them, at most we will only be able to calculate using finite approximations of these numbers, not the numbers themselves, except in the case of such numbers as can be exactly represented in numerical form as ratios of integers. 
And yet, as we have said, the limit points of such non-terminating decimal sequences are finite, being fixed positions on a geometric line, and these limit points represent, from a 0 reference point, the exact values of the fixed magnitudes, whether the numbers are rational or irrational. This precise distinction is not relevant for much mathematical work, but for CH it is essential.

The foregoing is the most counterintuitive statement in this entire paper. We are so used to equating the infinite decimal expansion, which when thought about or written is necessarily finite, typically with an ellipsis at the end which is intended to mean “all the rest of this infinite sequence of numbers,” with the fixed, finite limiting value that our approximate, ellipsized version of the infinite decimal expansion is supposed to represent, that the difference between them is easy to overlook. But there is a fundamental difference between these two things, the one having an essentially infinite character, the other having an essentially finite character. Indeed, because we are already comfortable with such equating in the realm of individual numbers with finite magnitudes, it is that much easier to ignore the logical error in treating the natural numbers in their totality as a finite thing, and that much easier to accept other logically flawed ideas and conceptions beyond this, such as the idea that there could be such a thing as a “limit ordinal,” or that there could be numbers even beyond this. It is important to note again that the concept of a limit itself is not being challenged, and, as we understand from calculus, analysis, differential equations, etc., the concept of a limit is useful in a wide variety of circumstances, and produces correct results. The point here is not to challenge any of these results, but rather to spotlight an essential misconception in certain of our foundational ideas that, while irrelevant to many mathematical pursuits, is nonetheless highly relevant to others, and, in particular, to CH. 
The logical error which causes the misconception that an infinity, such as the natural numbers, can be treated as if it were a completed entity and manipulated in various ways as such, is the same logical error that leads us to believe that an infinite decimal expansion can be treated as if it were a finite number, rather than as what it is, viz., an infinite sequence of smaller and smaller magnitudes which approaches a finite number, but which never actually reaches it. We may say then that the practical conceptual tool in mathematics of treating an infinity as a finite thing, such as we do in, e.g., integration and differentiation in calculus, has certain bounds of applicability, and within these bounds this equating of an infinity with a finite thing does not interfere with the soundness of our results because our results do not in any way directly or essentially rely upon or make reference to the logical error inherent in such equating, so that this equating is used in such cases solely as a convenience to make mathematical work easier. Another way of saying this is that the process of taking a limit42 involves a smoothing over and ignoring of the logical error of equating an infinity with a finite thing, and that this is acceptable in many circumstances because the practical results of such an operation with regard to things in the real world that we might wish to measure are seen to accord as accurately as we like, subject to the limitations of our scientific tools, to the process of successive finer and finer approximations to a limiting value as expressed formally in the mathematical concept of a limit, and also because a limit itself is nothing but an expression of a pattern that has been found in an infinite sequence. 
In other words, we do not need to take the actual limit (which is impossible) in the real world which we are measuring and trying to understand, but are able to feel satisfied with a finite, though perhaps highly accurate, approximation, or with the fact that we can see a pattern in the infinite sequence, which then allows us to see whether the sequence approaches a finite number or not, and in the former case to know the finite number which the sequence ever more closely approaches. We then see this high degree of accord between the practical measurements and the idealized mathematical expectations, and, given that such accord is the primary goal in our mathematical pursuits (i.e., in the practice of mathematics we seek, either directly or indirectly, to describe and explain the real world’s essential logical makeup and the behavior of matter within this context), we simply do not think further, or at all, about the essential flaw in equating an infinity with a finite thing, since such considerations in this conceptual and practical context are irrelevant. But because, for powerful emotional reasons, we desire absolute certainty to the extent we can possibly obtain it, we then go back to the underlying mathematics and define things even more precisely with concepts like Dedekind cuts and Cauchy sequences, and this added “precision” makes us feel that much more confident that our equating of infinities with finite things is justified, which then makes it easier in our investigations in other areas to ignore or downplay the significance of this problem.

But any logical error in our premises will eventually show itself, often well before we recognize it for what it is. When statements, such as CH, are made that directly relate to or essentially depend upon the logical error, and whose meaning and significance and nature can only be understood after recognizing the logical error for what it is, i.e., whose solution cannot be obtained by a “reasonable approximation” that glosses over the logical error, then we have a different sort of beast, and then our investigations in the context of the systems of mathematical thought that produced such statements and that are themselves partly based on this logical error must be understood as being partly based on a logical error, so that we may come to step outside these systems and learn to see things from an unconventional, and more accurate, angle.

We will next briefly discuss the concept of measure. The concept of measure in mathematics is a generalization of the familiar concepts of length, area, and volume to n dimensions (as well as of other things, such as “magnitude, mass, and probability of events,”43 etc., which we will not discuss here). As such, it attempts to extract and preserve the essential properties across all these familiar concepts and express them in the language of set theory, e.g., the measure of the union of two disjoint sets must be equal to the sum of the measures of the individual sets, or the measure of the empty set (i.e., {}) must be 0, or the measure of a subset must be no larger than the measure of the set that contains it. In a particular formulation used in set theory which has relevance for CH, the measure of a single-element set is defined to be 0, and the measure of a countably infinite union of pairwise disjoint sets (i.e., a union of sets whose total number equals that of the natural numbers) is the sum of the individual measures of each of the sets.44 “A consequence of [these two parts of this definition of measure] is that every at most countable [i.e., finite or countable in number of elements] [set] has measure 0. Hence, if there is a measure on [a set], then [the set] is uncountable. It is clear that whether there exists a measure on [a set] depends only on the cardinality of [the set]… .”45 We should note also that a key part of the definition of measure for a set is the maximum value that a measure can be. 
For convenience, Hrbacek and Jech, in their definition, make the maximum possible measure of a set, whether finite or infinite, equal to 1, though, as they say, this is only a convention: other measures, such as the “counting measure,” would have an infinite set have a measure of ∞.46 Further, Hrbacek and Jech mention the open question “whether the Lebesgue measure47 can be extended to all sets of reals,” relating this to what they call the measure problem, a technical question regarding the definition of measure given, and then go on to say, “The measure problem, a natural question arising in abstract real analysis, is deeply related to the continuum problem, and surprisingly also to the subject we touched upon [earlier] – inaccessible cardinals. This problem has become the starting point for investigation of large cardinals, a theory that we will explore further in the next section.”48 This definition of measure and the subsequent comments show key signs of the essential logical error underlying modern set theory’s investigation of infinity, in that (a) the definition has been made in such a way as to reflect the underlying feeling that, somehow, it just feels right to make all at most countable sets have measure 0, while any measure above 0 must correspond to an uncountable set, and (b) the comments that Hrbacek and Jech make show that this area of investigation, i.e., that of understanding the relationship between the familiar concept of Euclidean length or distance, on the one hand, and the set of points that make up the real line, on the other, is particularly thorny in set theory and analysis; in fact, it is foundational.
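
For reference, the consequence quoted above (that every at most countable set has measure 0, so that any set carrying such a measure must be uncountable) can be written out as a short derivation. This is only a sketch of the standard set-theoretic reasoning as we understand it; the symbols S, μ, X, and a_n are our own notation, and we assume the usual requirement that the sets in a countable union be pairwise disjoint, which distinct single-element sets are.

```latex
% Assumed notation (not in the text above): S is a set, \mu a measure on S,
% and X = \{a_1, a_2, a_3, \dots\} \subseteq S is a countably infinite subset
% with the a_n distinct. The finite case is the same with a finite sum.
\begin{align*}
\mu(\{a_n\}) &= 0 \quad \text{for every } n
  && \text{(single-element sets have measure 0)} \\
\mu(X) = \mu\Bigl(\bigcup_{n=1}^{\infty} \{a_n\}\Bigr)
  &= \sum_{n=1}^{\infty} \mu(\{a_n\}) = \sum_{n=1}^{\infty} 0 = 0
  && \text{(countable additivity)} \\
\mu(S) = 1 \neq 0 &\;\Longrightarrow\; S \text{ is not countable}
  && \text{(convention that the maximum measure is 1)}
\end{align*}
```

The last line uses only the convention that the whole set has measure 1: if S were at most countable, the second line would force its measure to be 0.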